Will AI cannibalize open source?

As an open-source maintainer, I'm already seeing a shift in how projects are managed. More and more LLM-generated pull requests are appearing, piling up in an endless queue of PRs to review. This forces me to think about the future of open-source projects and their sustainability.

Open source has always been more than just code. It's a movement or a philosophy based on human reciprocity, voluntary contribution, and attribution. But even before AI, the burden of maintaining unpaid OSS was already high. In the AI era, the long-term incentives that sustained this ecosystem are being eroded.

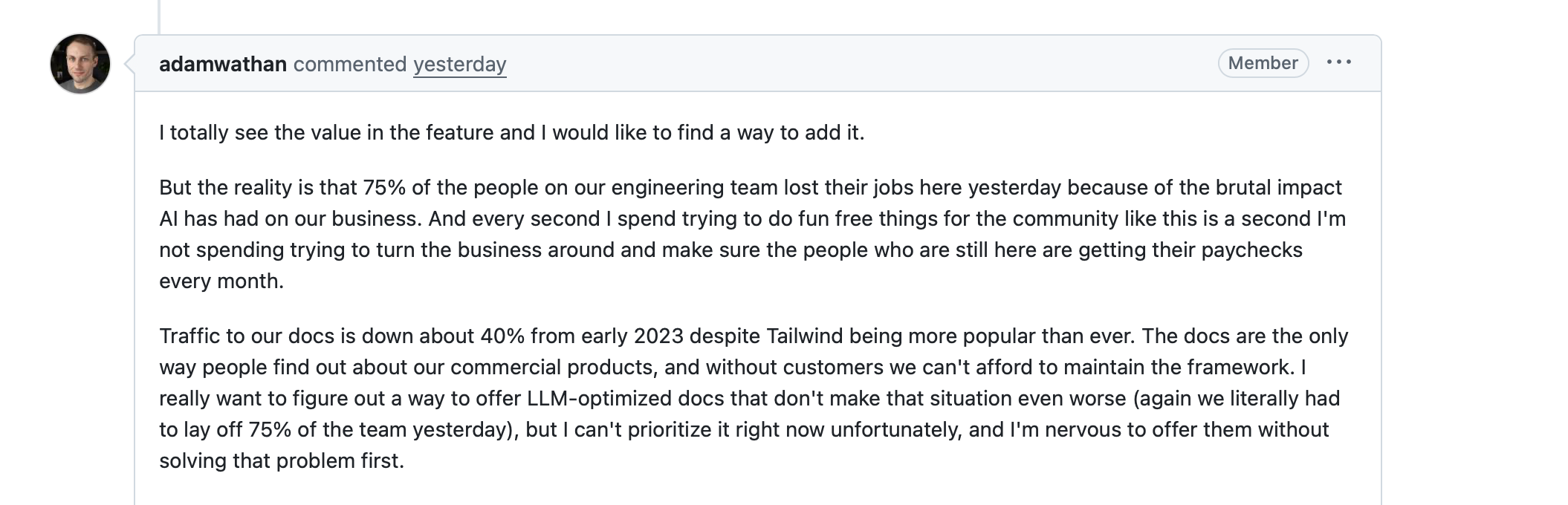

We are starting to see concrete signals of this shift. In a recent GitHub discussion on the Tailwind CSS project, founder Adam Wathan explained that 75% of their engineering team had been laid off, explicitly citing "the brutal impact AI has had on our business."

This is the canary in the coal mine, and it makes me believe AI will significantly reshape the open-source landscape.

As I mentioned, OSS was built on human reciprocity, attribution norms, and long-term maintenance incentives. LLM training broke that social contract without technically breaking any licenses during the training phase. It's extraction without replenishment. The vibe is closer to the United Fruit Company than to collaboration: value is harvested at scale, but little flows back to the communities that produced it.

Open source was always about attribution, and attribution is not just about credit; it's reputation, a hiring signal, and a justification for funding. When AI produces Tailwind-like utility classes or React patterns without naming the source, it strips OSS of its career and funding flywheel. The code survives, but the social and economic mechanisms around it weaken.

AI lowers the value of framework knowledge, commoditizes "how-to glue code," and shifts value away from libraries toward distribution, hosting, and integration. Open-source companies that relied on documentation and examples are going to suffer. Conversely, OSS companies that monetize infrastructure, hosted services, or data are much more resilient.

Maintaining open-source projects is becoming harder: long, dense PRs arrive accompanied by a single-line comment. This creates a massive code-review burden for maintainers. We're heading toward AI-generated code, AI-triaged issues, and AI-reviewed PRs, with human maintainers acting as the final arbiters.

Or, as Cory Doctorow says in his post, we become an "accountability sink." The engineer's job won't really be to oversee the AI's work; it will be to take the blame for the AI's mistakes.

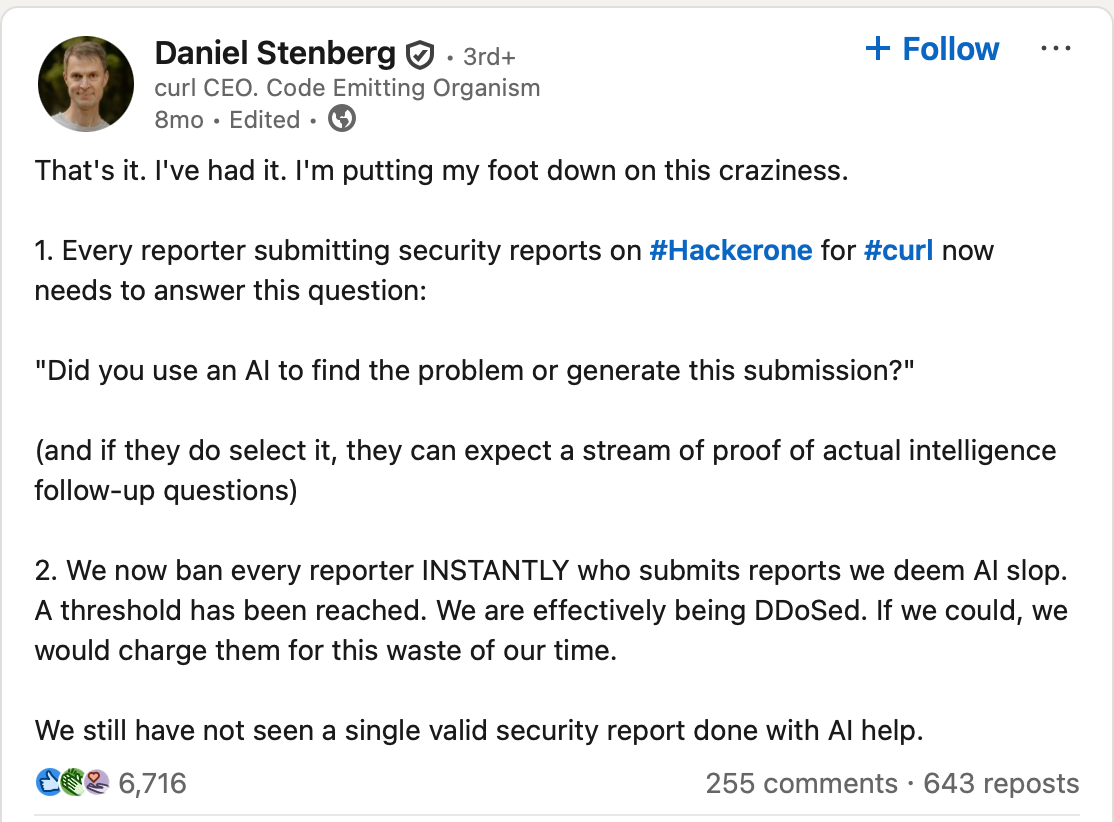

There is, perhaps, a more optimistic path. AI could lower the barrier to entry, allowing anyone with an idea to bring it to life. It may eventually automate the "toil" of dependency updates and CVE patches. However, until we solve the noise problem, the outlook remains grim. As Daniel Stenberg (creator of curl) noted, the curl project is effectively being "DDoSed" by AI-generated bug reports, making the work of human creators less sustainable than ever.

My take

Big open-source projects such as Kubernetes, Linux, Postgres, or Grafana, especially those with corporate backing, won't disappear. They are too deep, trusted, and institutional. They will become even more critical. However, small projects, especially unpaid ones or those based on boilerplate creation, documentation, and conventions, are the most at risk.

I believe more projects will go closed source. To maintain a competitive advantage, companies will decide not to publish code that could be used to train an LLM. We will see more SSPL-like licenses, "no-training" clauses, and similar restrictions.

For us maintainers, governance will harden. I foresee "AI-generated code disclosure" rules, stricter PR templates, or even explicit bans on LLM-generated pull requests.

AI won't kill OSS, but it will break its incentive model. OSS will survive, but with fewer hobbyist maintainers, more foundation-backed projects, more corporate control, and more automation in governance.

Bad times for the idealists.